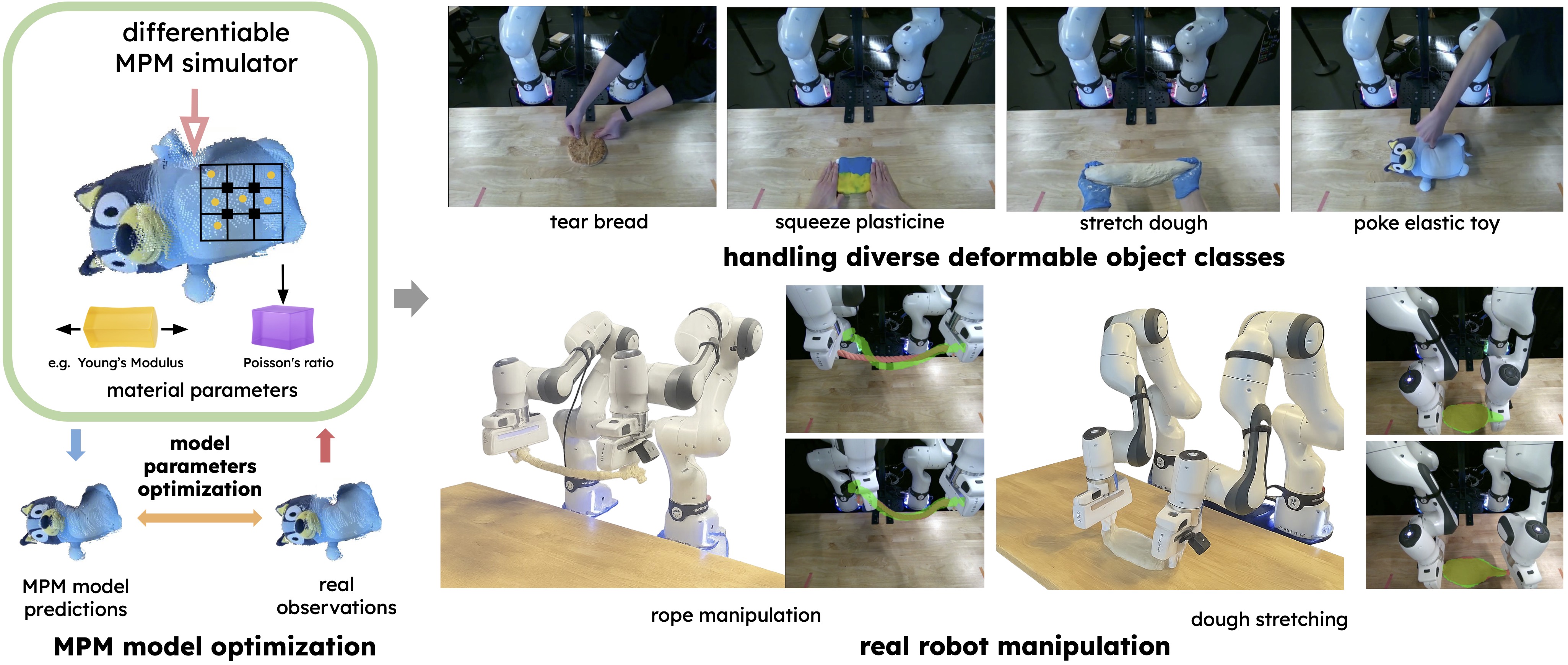

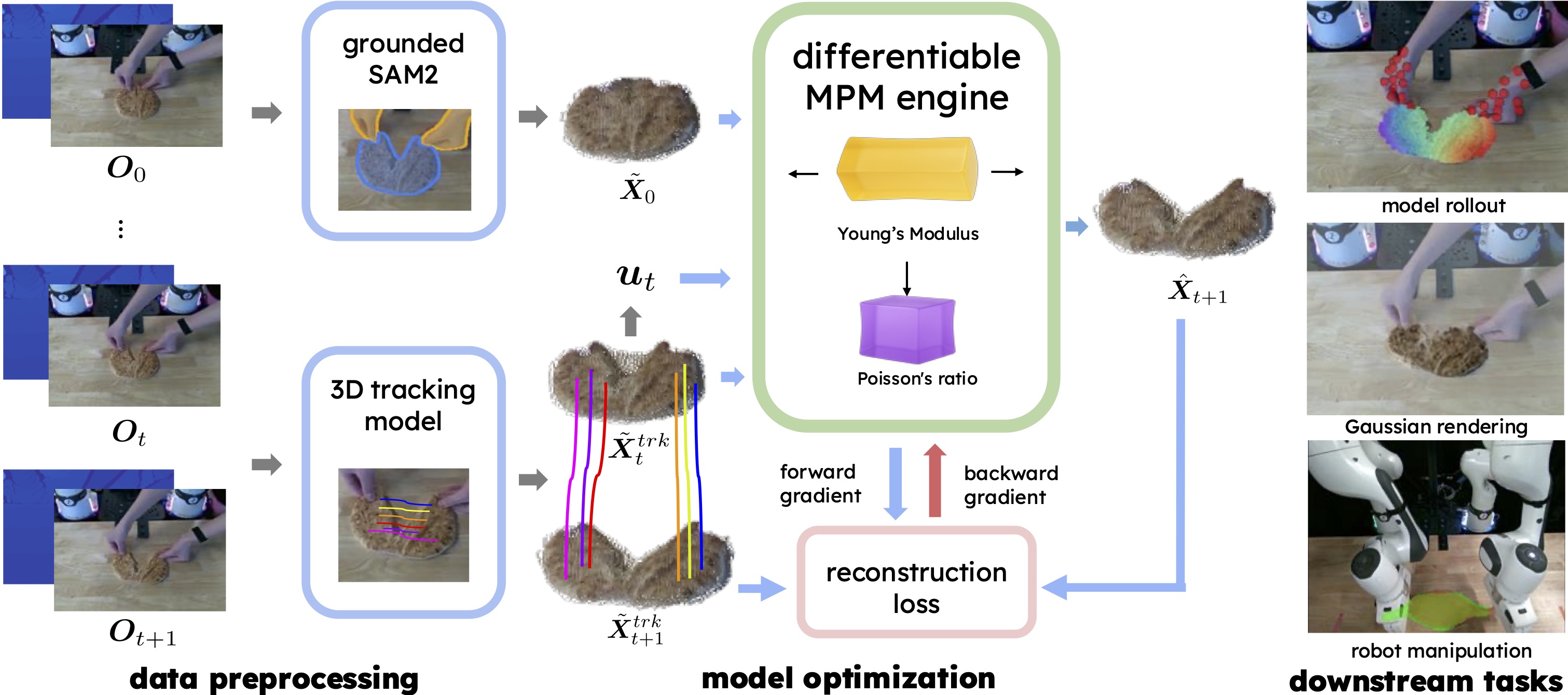

Modeling deformable objects, especially continuum materials, in a way that is physically plausible, generalizable, and data-efficient remains challenging across 3D vision, graphics, and robotic manipulation. Many existing methods either oversimplify the rich dynamics of deformable objects or require large training sets, which limits their generalization. We introduce Embodied MPM (EMPM), a deformable object modeling and simulation framework built on a differentiable Material Point Method (MPM) simulator that captures the dynamics of challenging materials. From multi-view RGB-D videos, our approach reconstructs geometry and appearance, then uses the MPM physics engine to simulate object behavior, fitting the simulator by minimizing the mismatch between predicted and observed visual data. We further optimize MPM parameters online using sensory feedback, yielding adaptive, robust, physics-aware object representations that open new possibilities for robotic manipulation of complex deformable objects. Experiments show that EMPM outperforms spring-mass baseline models.
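For readers new to MPM: the method stores material state on Lagrangian particles and exchanges it with a background Eulerian grid every step. The sketch below shows only the particle-to-grid (P2G) transfer in 1D with quadratic B-spline weights, the kernel popularized by MLS-MPM. It is an illustrative assumption, not EMPM's simulator, which is 3D, differentiable, and includes the stress, plasticity, and grid-to-particle steps omitted here. The function names are hypothetical.

```python
import numpy as np

def quadratic_bspline_weights(fx):
    """Quadratic B-spline weights for the 3 grid nodes around each particle.

    fx is the particle position in grid units measured from its left-most
    node, so 0.5 <= fx < 1.5. The three weights always sum to 1.
    """
    return np.stack([
        0.5 * (1.5 - fx) ** 2,
        0.75 - (fx - 1.0) ** 2,
        0.5 * (fx - 0.5) ** 2,
    ], axis=-1)

def p2g_1d(x, v, m, dx, n_nodes):
    """Scatter particle mass and momentum to a 1D grid (PIC-style, no affine term).

    Assumes every particle lies at least two cells away from the grid ends.
    """
    grid_m = np.zeros(n_nodes)
    grid_p = np.zeros(n_nodes)
    base = np.floor(x / dx - 0.5).astype(int)  # left-most of the 3 nodes
    fx = x / dx - base                          # offset from base, in [0.5, 1.5)
    w = quadratic_bspline_weights(fx)           # shape (n_particles, 3)
    for k in range(3):
        # np.add.at accumulates correctly when several particles share a node
        np.add.at(grid_m, base + k, w[:, k] * m)
        np.add.at(grid_p, base + k, w[:, k] * m * v)
    # Grid velocity = momentum / mass on nodes that received any mass
    grid_v = np.divide(grid_p, grid_m, out=np.zeros_like(grid_p), where=grid_m > 0)
    return grid_m, grid_p, grid_v
```

Because the weights form a partition of unity, total grid mass and momentum equal the particle totals, which is a quick sanity check when writing a transfer kernel.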

Method Overview. Our MPM simulation engine takes a reconstructed point cloud and tracked 3D points as inputs. The model parameters are optimized by minimizing the discrepancy between the model's predictions and the 3D shape reconstruction and point tracks. The control inputs applied to the MPM dynamics model are computed from velocities extracted from the reconstructions. At inference time, we evaluate the model on predicting 3D point positions and on rendering RGB images of the 3D Gaussian splats.
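The overview names two ingredients: a discrepancy between simulated and reconstructed states, and control inputs derived from velocities in the reconstruction. The sketch below assumes a common choice for each: a symmetric Chamfer distance on the point cloud plus an L2 error on tracked points, and finite-difference velocities of the tracked points. The exact loss terms and weights EMPM uses are not given here, so `w_track` and all function names are hypothetical.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets a (N, 3) and b (M, 3)."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)  # (N, M) squared distances
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()

def tracking_loss(pred_tracked, obs_tracked):
    """Mean squared distance between predicted and observed tracked points (K, 3)."""
    return np.mean(np.sum((pred_tracked - obs_tracked) ** 2, axis=-1))

def total_loss(pred_points, obs_points, pred_tracked, obs_tracked, w_track=1.0):
    # Hypothetical weighting between the shape term and the tracking term
    return (chamfer_distance(pred_points, obs_points)
            + w_track * tracking_loss(pred_tracked, obs_tracked))

def control_velocities(tracked_positions, dt):
    """Finite-difference velocities from tracked positions of shape (T, K, 3).

    Returns an array of shape (T - 1, K, 3), one velocity per frame interval.
    """
    return np.diff(tracked_positions, axis=0) / dt
```

Chamfer distance needs no point correspondences, which suits a reconstructed cloud, while the tracking term uses the correspondences that point tracking does provide.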

We perform offline simulation and optimization of objects using collected RGB-D videos.

[Videos: Collected Video vs. Offline Simulation Twin for Stretch dough, Squeeze plasticine, Tear bread, Manipulate rope, and Lift cloth]

We perform online simulation and optimization using real-time sensory data.

In the simulation clip, the red part is the segmented object, and the green part is the simulation overlay.

[Videos: Streaming Video vs. Online Simulation Twin for Manipulate rope and Manipulate bread dough]
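The online setting refines parameters as frames arrive instead of fitting a whole recorded video at once. The loop below sketches that structure under strong simplifying assumptions: `toy_step` is a hypothetical one-parameter stand-in for an MPM step, the gradient comes from a central finite difference rather than the simulator's automatic differentiation, and exactly one gradient step is taken per frame. It illustrates an online update, not EMPM's actual optimizer or parameterization.

```python
import numpy as np

def toy_step(theta, x, u, dt):
    """Hypothetical stand-in for one MPM step: points move with the applied
    control velocity u, scaled by an unknown material-like parameter theta."""
    return x + dt * theta * u

def frame_loss(theta, x, u, x_obs, dt):
    # Mean squared position error against the newly observed frame
    return np.mean(np.sum((toy_step(theta, x, u, dt) - x_obs) ** 2, axis=-1))

def online_update(theta, x, u, x_obs, dt, lr=10.0, eps=1e-4):
    """One gradient step on the current frame's loss.

    A central finite difference replaces the autodiff gradient that a
    differentiable simulator would provide.
    """
    g = (frame_loss(theta + eps, x, u, x_obs, dt)
         - frame_loss(theta - eps, x, u, x_obs, dt)) / (2 * eps)
    return theta - lr * g

def run_stream(theta_true=0.7, theta0=0.2, n_frames=50, n_points=100,
               dt=0.1, noise=1e-3, seed=0):
    """Simulate a sensor stream from theta_true and refine theta0 frame by frame."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=(n_points, 3))
    theta = theta0
    for _ in range(n_frames):
        u = rng.normal(size=(n_points, 3))                  # control velocity this frame
        x_obs = toy_step(theta_true, x, u, dt)              # synthetic "sensor" frame
        x_obs = x_obs + noise * rng.normal(size=x_obs.shape)
        theta = online_update(theta, x, u, x_obs, dt)
        x = x_obs                                           # continue from the observed state
    return theta
```

With this linear toy model the per-frame loss is quadratic in `theta`, so each step shrinks the error by a roughly constant factor and a fixed learning rate suffices. A real MPM loss is nonconvex, so step sizes and the number of iterations per frame would need tuning.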

We showcase model rollouts in which the controller explores candidate actions in simulation to find optimal ones, while the robot tracks the simulated rollout to execute the task.

In the simulation clip, the red part is the segmented object, and the green part is the simulation overlay.

[Videos: Simulation Rollout vs. Robot Execution for Manipulate rope and Manipulate bread dough]
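The page states only that controller actions are explored in simulation and that the robot tracks the resulting rollout. One simple way to do that exploration is random shooting: sample many action sequences, roll each out in the model, and keep the cheapest. The sketch below assumes that scheme and swaps a hypothetical 2D point-mass model in for the MPM twin; the cost terms, horizon, and sampling range are illustrative choices, not EMPM's.

```python
import numpy as np

def rollout_cost(x0, actions, goal, dt, effort_weight=1e-4):
    """Cost of an action sequence under a hypothetical point-mass model."""
    x = np.array(x0, dtype=float)
    v = np.zeros_like(x)
    cost = 0.0
    for a in actions:
        v = v + dt * a                          # actions are accelerations in this toy model
        x = x + dt * v                          # semi-implicit Euler position update
        cost += effort_weight * np.sum(a ** 2)  # small control-effort penalty
    return cost + np.sum((x - goal) ** 2)       # terminal squared distance to goal

def random_shooting(x0, goal, horizon=20, n_samples=256, dt=0.05,
                    a_max=5.0, effort_weight=1e-4, seed=0):
    """Sample action sequences, roll each out in the model, keep the cheapest."""
    rng = np.random.default_rng(seed)
    x0 = np.asarray(x0, dtype=float)
    samples = rng.uniform(-a_max, a_max, size=(n_samples, horizon, x0.shape[0]))
    costs = np.array([rollout_cost(x0, seq, goal, dt, effort_weight) for seq in samples])
    best = int(np.argmin(costs))
    return samples[best], costs[best]
```

The returned action sequence plays the role of the simulated rollout a robot would track. In practice such planners usually run in a receding-horizon loop (execute the first few actions, re-observe, re-plan), and variants such as CEM or MPPI reweight samples instead of keeping only the single best.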

We compare our method with PhysTwin, a spring-mass baseline.

[Videos: ground truth (GT) vs. Ours vs. PhysTwin for Tear bread, Squeeze plasticine, and Manipulate rope]

@article{chen2026empm,
  title={EMPM: Embodied MPM for Modeling and Simulation of Deformable Objects},
  author={Yunuo Chen and Yafei Hu and Lingfeng Sun and Tushar Kusnur and Laura Herlant and Chenfanfu Jiang},
  journal={arXiv preprint arXiv:2601.17251},
  year={2026},
}